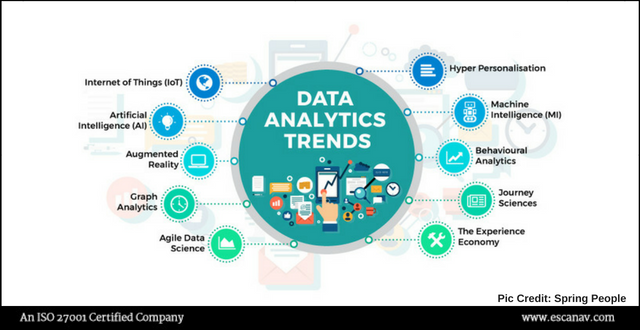

As businesses transform into data-driven enterprises, data technologies and strategies need to start delivering value. Here are the data analytics trends to watch in 2018.

Together with social, mobile and cloud, data analytics and associated data technologies have emerged as core business disruptors in the digital age. As companies began the shift from being data-generating to data-powered organizations in 2017, data and analytics became the center of gravity for many enterprises. In 2018, these technologies need to start delivering value. Here are the approaches, roles, and concerns that will drive data analytics strategies in the year ahead.

Data lakes need to prove their business value

Data has been accumulating in the enterprise for years. The internet of things (IoT) is accelerating the creation of data as data sources move from web to mobile to machines, which also multiplies the opportunities for data to leak.

“This has created a dire need to scale out data pipelines in a cost-effective way,” says Guy Churchward, CEO of real-time streaming data platform provider Data Torrent.

For many enterprises, buoyed by technologies like Apache Hadoop, the answer was to create data lakes — enterprise-wide data management platforms for storing all of an organization’s data in native formats. Data lakes promised to break down information silos by providing a single data repository the entire organization could use for everything from business analytics to data mining. Raw and ungoverned, data lakes have been pitched as a big data catch-all and cure-all.

But while data lakes have proven successful for storing massive quantities of data, gaining actionable insights from that data has proven difficult.

“The data lake served companies fantastically well through the data ‘at rest’ and ‘batch’ era,” Churchward says. “Back in 2015, it started to become clear this architecture was getting overused, but it’s now become the Achilles heel for real real-time data analytics. Parking data first, then analyzing it immediately puts companies at a massive disadvantage. When it comes to gaining insights and taking actions as fast as the computer can allow, companies relying on stale event data create a total eclipse on visibility, actions, and any possible immediate remediation. This is one area where ‘good enough’ will prove strategically fatal.”

Monte Zweben, CEO of Splice Machine, agrees.

“The Hadoop era of disillusionment hits full stride, with many companies drowning in their data lakes, unable to get an ROI because of the complexity of duct-taping Hadoop-based compute engines,” Zweben predicts for 2018.

To survive 2018, data lakes will have to start proving their business value, says Ken Hoang, vice president of strategy and alliances at data catalog specialist Alation.

“The new dumping ground of data — data lakes — has gone through experimental deployments over the last few years, and will start to be shut down unless they prove that they can deliver value,” Hoang says. “The hallmark for a successful data lake will be having an enterprise catalog that brings information discovery, AI, and information stewarding together to deliver new insights to the business.”

However, Hoang doesn’t believe all is lost for data lakes. He predicts data lakes and other large data hubs can find a new lease on life with what he calls “super hubs” that can deliver “context-as-a-service” via machine learning.

“Deployments of large data hubs over the last 25 years (e.g., data warehouses, master data management, data lakes, Salesforce and ERP) resulted in more data silos that are not easily understood, related, or shared,” Hoang says. “A hub of hubs will bring the ability to relate assets across these hubs, enabling context-as-a-service. This, in turn, will drive more relevant and powerful predictive insights to enable faster and better operational business results.”

Ted Dunning, the chief application architect for MapR, predicts a similar shift: With big data systems becoming a center of gravity in terms of storage, access, and operations, businesses will look to build a global data fabric that will give comprehensive access to data from many sources and to computation for truly multi-tenant systems.

“We will see more and more businesses treat computation in terms of data flows rather than data that is just processed and landed in a database,” Dunning says. “These data flows capture key business events and mirror business structure. A unified data fabric will be the foundation for building these large-scale flow-based systems.”

These data fabrics will support multiple kinds of computation that are appropriate in different contexts, Dunning says. “The emerging trend is to have a data fabric that provides data-in-motion and data-at-rest needed for multi-cloud computation provided by things like Kubernetes.”

Langley Eide, chief strategy officer of self-service data analytics specialist Alteryx, says IT won’t be left alone on the hook when it comes to making data lakes deliver value: Line-of-business (LOB) analysts and chief digital officers (CDOs) will also have to take responsibility in 2018.

“Most analysts have not taken advantage of the vast amount of unstructured resources like clickstream data, IoT data, log data, etc., that have flooded their data lakes — largely because it’s difficult to do so,” Eide says. “But truthfully, analysts aren’t doing their job if they leave this data untouched. It’s widely understood that many data lakes are underperforming assets – people don’t know what’s in there, how to access it, or how to create insights from the data. This reality will change in 2018, as more CDOs and enterprises want better ROI for their data lakes.”

Eide predicts that 2018 will see analysts replacing “brute force” tools like Excel and SQL with more programmatic techniques and technologies, such as data cataloging, to discover and get more value out of the data in their lakes.